This article describes how to run parallel PHOENICS on computers running Microsoft Windows. The user must first install MPI (usually Microsoft MPI); please refer to chapter 6 of TR110 for instructions on preparing your workstation(s) for running parallel PHOENICS.

The simplest way to launch parallel EARTH is from the VR-Editor.

When a parallel PHOENICS licence has been purchased, an additional menu item, 'Parallel Solver', will appear under the 'Run' menu option in the VR-Editor. Once selected, the parallel solver option launches a dialog box where the user can specify parameters that will affect how the parallel solver is run.

The first consideration is the number of processes to run the solver across. This is selected from the pulldown combo box at the top of the dialog. The pulldown element allows the user to select up to 64 processes incrementing in steps of 2. The user is free to type any positive integer into this box, for example to select an odd number of processes.

The 'Cluster Host List' portion of the dialog enables the user to select which hosts in a cluster are used for computation. Here there are three options,

This mode of running the parallel solver will always launch the root process on the local machine and a convergence monitoring window will appear on screen (as per the sequential solver).

An MPI configuration file is only used to fine-tune the distribution of processes amongst the different computers in a cluster, so it will not be required if running on a local computer only.

When using the default automatic domain decomposition, parallel PHOENICS only differs from sequential when the solver is run: problem set-up and post-processing of results can be done in exactly the same way as for the sequential version. A case that has been run in sequential mode can normally be run in parallel without any changes being made. The output from a parallel PHOENICS simulation will be result and phi files, having the same format as for sequential simulations.

It is also possible to by-pass the automatic domain decomposition algorithm, and to specify how you want to decompose the calculation domain into sub-domains. This can be done by selecting 'Manual' in the Domain decomposition group box on the Run Parallel solver dialog.

When you first switch to manual decomposition the arrangement will match the automatic decomposition that would otherwise be used. Normally automatic decomposition will suffice, but there may be occasions where a different decomposition is preferable to avoid a split at a critical location.

For information on more detailed control over manual domain decomposition, see the Encyclopaedia entry on the PARDAT file.

In a Command Prompt window, if the EARTH executable is launched directly, then the sequential solver will be used; to run the parallel solver, the program name 'earexe' is used as an argument to the MPI launch program mpiexec.

A script RUNPAR.BAT [nnodes] is provided. The optional argument [nnodes] indicates the number of processes to be launched on the current computer. The default is to launch two processes.

For example, RUNPAR 2 will execute the MPI command:

mpiexec -np 2 \phoenics\d_earth\d_windf\earexe

If a cluster has been defined by smpd, then the command will execute on two processors in the cluster; otherwise it will launch multiple processes on the local machine.
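The command that RUNPAR issues can also be assembled from a script. The following Python sketch only builds the argument list quoted above. The function name `build_mpiexec_command` and the `EAREXE` constant are illustrative assumptions, not part of PHOENICS, and the actual launch is left commented out.

```python
# Default solver location quoted in this article; adjust for your installation.
EAREXE = r"\phoenics\d_earth\d_windf\earexe"


def build_mpiexec_command(nprocs=2, solver=EAREXE):
    """Return the mpiexec argument list for nprocs solver processes.

    Hypothetical helper: it mirrors 'mpiexec -np N earexe' and nothing more.
    """
    if int(nprocs) < 1:
        raise ValueError("the number of processes must be a positive integer")
    return ["mpiexec", "-np", str(int(nprocs)), solver]


if __name__ == "__main__":
    cmd = build_mpiexec_command(2)
    print(" ".join(cmd))
    # To launch the run from the working directory, uncomment:
    # import subprocess
    # subprocess.run(cmd, check=True)
```

Keeping the command as a list rather than a single string avoids quoting problems if the solver path contains spaces.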

There are also 'run' commands which can be used in conjunction with configuration files; for example, 'runcl4' uses the configuration file 'config4'. Config4 lists the PCs and processors to be used (see the Configuration file section). The script runcl4 will execute the following command:

mpiexec -configfile \phoenics\d_utils\d_windf\config4

In most cases, it is sufficient to use the automatic domain decomposition provided within the parallel PHOENICS solver. In some cases, though, the user may prefer to choose how the domain is partitioned, i.e. to apply a manual decomposition. To do this, the user can either supply a file PARDAT in the working directory or add PIL settings to the Q1 which describe how the domain is to be partitioned.

Simply put, the PIL logical LG(2) will instruct the splitter to by-pass the automatic domain decomposition, and split the domain according to the settings defined in the IG array as follows.

IG(1) specifies the number of sub-domains in the X-direction;

IG(2) specifies the number of sub-domains in the Y-direction;

IG(3) specifies the number of sub-domains in the Z-direction.
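The three IG entries multiply to give the total number of sub-domains. As a minimal sketch, the Python helpers below generate the Q1 statements for a chosen split and report that total. The function names are hypothetical. Comparing the total against the number of processes you intend to launch is a suggested sanity check, not a documented PHOENICS requirement.

```python
def q1_decomposition(nx, ny, nz):
    """Return the Q1 statements for an nx-by-ny-by-nz manual split."""
    for n in (nx, ny, nz):
        if not isinstance(n, int) or n < 1:
            raise ValueError("each sub-domain count must be a positive integer")
    # LG(2)=T by-passes the automatic decomposition; IG(1..3) give the split.
    return ["LG(2)=T", f"IG(1)={nx}", f"IG(2)={ny}", f"IG(3)={nz}"]


def subdomain_count(nx, ny, nz):
    """Total number of sub-domains produced by the split."""
    return nx * ny * nz


if __name__ == "__main__":
    print("\n".join(q1_decomposition(2, 2, 1)))
    print("sub-domains:", subdomain_count(2, 2, 1))
```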

For example, to split the domain into 4 sub-domains (2 in each of the x and y directions and 1 in z), the following statements must be set in the Q1 file:

LG(2)=T
IG(1)=2
IG(2)=2
IG(3)=1

Warning:

When running across a cluster, the run will attempt to launch an instance of the solver from the same location on each of the compute nodes. If the default locations are used, this will be C:\phoenics\d_earth\d_windf\earexe.exe. If a Private Earth is to be used, then this also should be copied to the equivalent directory for each of the compute nodes.

When running across a cluster, it is important to consider the working directory on the compute nodes. This is because, by default, mpiexec will attempt to launch the process in the equivalent directory on all the workstations. So, if on the head node you are working in c:\phoenics\myprojects\projectbeta, then this directory should also exist on all the workstations in the cluster; otherwise the run will fail.

As it can be difficult to always remember to create the working directory on all the cluster workstations, there is an alternative. One can set up an environment variable PHOE_WORK_DIR on each of the cluster workstations to point to an existing fixed directory,

e.g. PHOE_WORK_DIR=C:\phoenics\mypar_runs

Then all processes (aside from the launching process) will write their output to this location.

PLEASE NOTE: The use of PHOE_WORK_DIR is not recommended if you are likely to make multiple parallel runs simultaneously. This is because the second parallel run (and subsequent runs) will overwrite the working files of the first.
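Because a missing working directory causes the run to fail, it can help to check the directory on each compute node before launching. The Python sketch below mirrors the rule described above: PHOE_WORK_DIR if it is set, otherwise the directory equivalent to the one the run was started from. It is a pre-flight check to run on each node, with hypothetical function names; it is not part of PHOENICS and does not reproduce the solver's own logic.

```python
import os


def compute_node_work_dir(launch_dir, env=None):
    """Directory where non-launching processes are expected to write.

    Uses PHOE_WORK_DIR when it is set and non-empty, else launch_dir.
    """
    env = os.environ if env is None else env
    return env.get("PHOE_WORK_DIR") or launch_dir


def check_work_dir(launch_dir, env=None):
    """Return (path, exists) so a missing directory is caught before a run."""
    path = compute_node_work_dir(launch_dir, env)
    return path, os.path.isdir(path)


if __name__ == "__main__":
    path, exists = check_work_dir(os.getcwd())
    print(path, "exists" if exists else "is MISSING")
```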

The above methods of launching the parallel solver do not allow the user to fix the number of solver instances on each workstation. If that level of control is required, the user will need to use the MPI configuration file (see below).

The MPI configuration file option gives a more flexible way of launching the parallel solver. Assuming we have PHOENICS installed on each computer in the cluster, the following config file will use the public earexe.exe to run a single process on each of the four computers.

-np 1 -host cham-cfd1 c:\phoenics\d_earth\d_windf\earexe.exe
-np 1 -host cham-cfd2 c:\phoenics\d_earth\d_windf\earexe.exe
-np 1 -host cham-cfd3 c:\phoenics\d_earth\d_windf\earexe.exe
-np 1 -host cham-cfd4 c:\phoenics\d_earth\d_windf\earexe.exe

The following example launches two processes on each of two computers where PHOENICS is installed only on the head node:

-np 2 -host cham-cfd1 c:\phoenics\d_earth\d_windf\earexe.exe
-np 2 -host cham-cfd2 \\cham-cfd1\d_earth\d_windf\earexe.exe

Users should create their own configuration and 'run' files, based on the examples provided, tailored to their own installation. These can either be located in \phoenics\d_utils\d_windf or the local working directory.
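When a cluster has more than a few hosts, the configuration file can be generated rather than typed. The Python sketch below writes one '-np N -host NAME PATH' entry per line, following the layout of the examples above. The function names are hypothetical, and it assumes the same solver path is valid on every host unless a different one is passed in.

```python
# Public solver location used in the examples in this article.
EAREXE = r"c:\phoenics\d_earth\d_windf\earexe.exe"


def config_lines(hosts, solver=EAREXE):
    """Build one '-np N -host NAME PATH' line per (host, nprocs) pair."""
    lines = []
    for host, nprocs in hosts:
        if nprocs < 1:
            raise ValueError(f"{host}: process count must be at least 1")
        lines.append(f"-np {nprocs} -host {host} {solver}")
    return lines


def write_config(path, hosts, solver=EAREXE):
    """Write the configuration file, one entry per line."""
    with open(path, "w", encoding="ascii") as handle:
        handle.write("\n".join(config_lines(hosts, solver)) + "\n")


if __name__ == "__main__":
    cluster = [("cham-cfd1", 1), ("cham-cfd2", 1), ("cham-cfd3", 1), ("cham-cfd4", 1)]
    print("\n".join(config_lines(cluster)))
```

The resulting file would then be passed to mpiexec with the -configfile option, as in the runcl4 example.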

All Nodes in the cluster should belong to the same Workgroup or Domain, and the user should be logged into each Node on the Cluster using the same Workgroup/Domain User account and password.

A full PHOENICS installation must be made on the head node. A PHOENICS installation is strongly recommended on the other compute nodes, but it is not essential.

C:\phoenics\d_allpro\phoenics.lic
C:\phoenics\d_allpro\coldat
C:\phoenics\d_allpro\config
C:\phoenics\d_allpro\prefix
C:\phoenics\d_earth\earcon
C:\phoenics\d_earth\props

The files get_hostid.bat and lmhostid.exe (in c:\phoenics\d_allpro) will also be needed initially to identify the compute node HOSTID necessary for unlocking the software.

C:\phoenics\d_earth\d_windf\earexe.exe

During a run of the parallel solver the processes on the compute nodes will need to read and write some working files. These are generally only of use to the program during a run or perhaps for diagnostic purposes if things go wrong, but they still need to be written somewhere. By default these will be in an equivalent directory to that used to start the run on the head node. So if the run was started from c:\phoenics\d_priv1 on the head node, the user will need to make sure that there is a directory of the same name on each of the compute nodes.

If the parallel solver fails on start up from PHOENICS VR it may be that there is the wrong MPI setting in the CHAM.INI file. The error message may be similar to..

Several markers here indicate that it is trying to launch the parallel solver using the MPICH2 method. The first is the -localonly flag in the command line, which is only available in MPICH2. The second is the registry key errors, which again will only be seen with MPICH2.

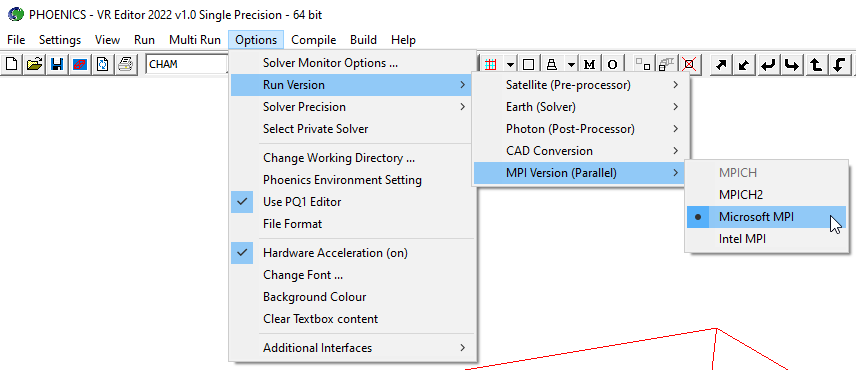

The MPI setting may be checked or reset via the Options menu in PHOENICS VR; for PHOENICS 2019 it should be set to Microsoft MPI.

The correct settings in CHAM.INI should be

[Earth]
MAXFCT = 10000
MPI = msmpi
NPROCS = 4

It may be necessary to update this setting in local cham.ini files for cases brought forward from earlier versions of PHOENICS.
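For cases carried over from earlier versions, the MPI entry can be checked with a short script before launching. The sketch below uses Python's standard configparser to read the [Earth] section. It assumes the file follows plain INI syntax as in the extract above; a real cham.ini may contain lines that configparser rejects, in which case the file should be inspected by hand. The function name `mpi_setting` is hypothetical.

```python
import configparser


def mpi_setting(ini_text):
    """Return the MPI value from the [Earth] section, or None if absent."""
    parser = configparser.ConfigParser(strict=False, interpolation=None)
    parser.read_string(ini_text)
    # Option names are matched case-insensitively by configparser.
    return parser.get("Earth", "MPI", fallback=None)


# Sample text copied from the settings shown in this article.
SAMPLE = """[Earth]
MAXFCT = 10000
MPI = msmpi
NPROCS = 4
"""

if __name__ == "__main__":
    value = mpi_setting(SAMPLE)
    print("MPI =", value)
    if value != "msmpi":
        print("MPI is not set to msmpi; check the Options menu in PHOENICS VR")
```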

A call to mpiexec to start a parallel run may appear to produce no further response, with the program seeming to stall in the Command Prompt window. The Task Manager process list shows mpiexec.exe, but no instances of earexe.exe. If this occurs, it is likely that the wrong version of mpiexec.exe is being picked up. Right-click on the mpiexec.exe process in the Task Manager and select the item Open File Location; it is likely that this will not be in Microsoft MPI\bin. If you have installed the Intel Fortran compiler, it may refer to Intel's version of MPI. In this case, modify the PATH so that C:\Program Files\Microsoft MPI\bin (or wherever you have installed Microsoft MPI) occurs before any alternative MPI installations. Refer also to the previous item about setting the version of MPI from PHOENICS VR.
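The PATH order can also be inspected from a script instead of the Task Manager. The Python sketch below lists every mpiexec.exe found on PATH in search order and reports whether the first one lies under a 'Microsoft MPI' directory. The function names are hypothetical, and the check is a simple substring match on the directory name, so it is only a heuristic for the default install location.

```python
import os


def mpiexec_candidates(path_value, is_file=os.path.isfile, sep=os.pathsep):
    """List every mpiexec.exe found on PATH, in search order."""
    found = []
    for directory in path_value.split(sep):
        directory = directory.strip().strip('"')
        if not directory:
            continue
        candidate = os.path.join(directory, "mpiexec.exe")
        if is_file(candidate):
            found.append(candidate)
    return found


def first_is_microsoft_mpi(candidates):
    """True when the first mpiexec.exe on PATH sits under 'Microsoft MPI'."""
    return bool(candidates) and "microsoft mpi" in candidates[0].lower()


if __name__ == "__main__":
    hits = mpiexec_candidates(os.environ.get("PATH", ""))
    for hit in hits:
        print(hit)
    if not first_is_microsoft_mpi(hits):
        print("The first mpiexec.exe on PATH is not under Microsoft MPI")
```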

For further information on Microsoft MPI see their web page, https://docs.microsoft.com/en-us/message-passing-interface/microsoft-mpi.